
Latest Updates

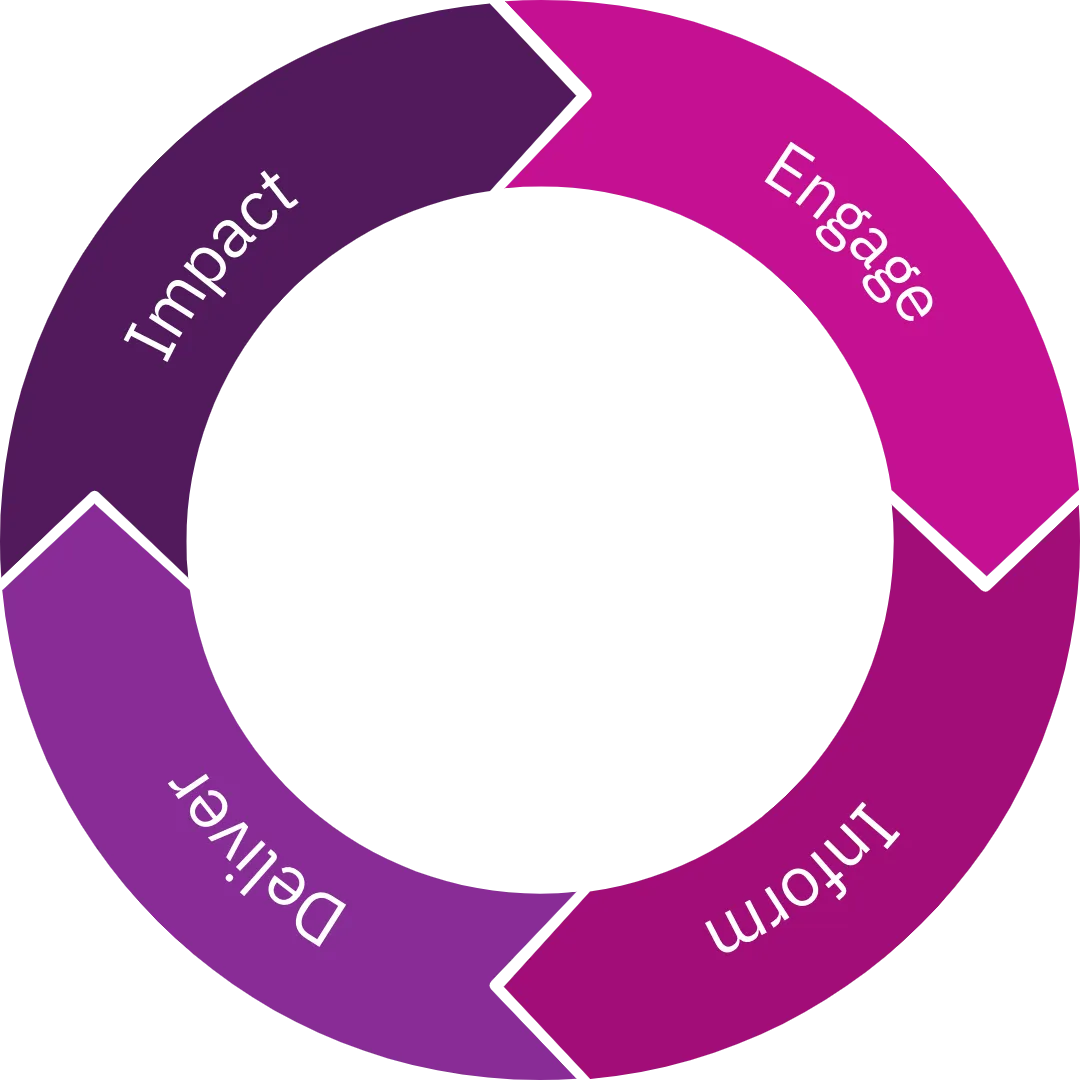

Services

How I Help

I deliver bespoke consulting engagements to transform call centre security. My services cover every stage of your call centre security journey, from building the business case through to implementation and optimisation, and each engagement is packaged to suit your specific needs.